Google has extended Project Gameface, an open-source project aimed at making tech devices more accessible, to Android, where it can now be used to control a smartphone's interface. The project was first unveiled at Google I/O 2023 as a hands-free gaming mouse controlled with head movements and facial expressions. It is designed for people with physical disabilities who cannot operate devices with their hands or voice. The Android version keeps the same functionality and adds a virtual cursor, so users can control their device without touching it.

In a blog post aimed at developers, Google said: “We’re opening up more code for Project Gameface to help developers build Android apps to make every Android device more accessible. Using the device’s camera, it seamlessly tracks facial expressions and head movements, turning them into intuitive and personalized controls.” The company also encouraged developers to use these tools to build accessibility features into their own apps.

Project Gameface was developed in collaboration with Incluzza, an Indian organization that supports people with disabilities. Through this collaboration, the team learned how the technology could be extended to other use cases, such as typing text and searching for jobs. The Android version uses the MediaPipe Face Landmarks Detection API and the Android Accessibility Service to create a new virtual cursor. The cursor follows the user’s head movements, which are tracked by the device’s front camera.
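The article does not describe how head motion is converted into cursor movement. A minimal sketch of one common approach, assuming the face tracker reports a head point (for example a nose-tip landmark) as normalized (x, y) coordinates in [0, 1] each frame, moves the cursor by the smoothed change in that point and clamps it to the screen. `HeadCursor`, `gain`, and `smoothing` are hypothetical names, not part of Gameface or MediaPipe, and the sketch ignores camera mirroring and calibration.

```python
from dataclasses import dataclass, field
from typing import Optional, Tuple


@dataclass
class HeadCursor:
    """Hypothetical mapper from a tracked head position to a cursor position.

    Assumes a face tracker reports the head's position each frame as
    normalized (x, y) coordinates in [0, 1], e.g. a nose-tip landmark.
    """
    screen_w: int
    screen_h: int
    gain: float = 2.0       # hypothetical sensitivity multiplier
    smoothing: float = 0.5  # weight of the newest sample in the moving average, in (0, 1]
    x: float = field(default=0.0, init=False)
    y: float = field(default=0.0, init=False)
    _prev: Optional[Tuple[float, float]] = field(default=None, init=False, repr=False)
    _smoothed: Optional[Tuple[float, float]] = field(default=None, init=False, repr=False)

    def __post_init__(self) -> None:
        # Start the cursor in the middle of the screen.
        self.x = self.screen_w / 2
        self.y = self.screen_h / 2

    def update(self, head_x: float, head_y: float) -> Tuple[int, int]:
        """Feed one frame's head position; return the cursor's pixel position."""
        # Exponential moving average to damp camera jitter.
        if self._smoothed is None:
            self._smoothed = (head_x, head_y)
        else:
            a = self.smoothing
            sx, sy = self._smoothed
            self._smoothed = (a * head_x + (1 - a) * sx, a * head_y + (1 - a) * sy)
        if self._prev is not None:
            # Relative mode: move by the change in head position, scaled to the screen.
            dx = (self._smoothed[0] - self._prev[0]) * self.gain * self.screen_w
            dy = (self._smoothed[1] - self._prev[1]) * self.gain * self.screen_h
            # Keep the cursor on screen.
            self.x = min(max(self.x + dx, 0.0), self.screen_w - 1)
            self.y = min(max(self.y + dy, 0.0), self.screen_h - 1)
        self._prev = self._smoothed
        return round(self.x), round(self.y)


cursor = HeadCursor(screen_w=1080, screen_h=2400)
for head in [(0.50, 0.50), (0.52, 0.50), (0.55, 0.48)]:
    print(cursor.update(*head))
```

Relative movement like this lets users steer with small head turns instead of pointing their face at an exact spot on the screen; a larger `gain` trades precision for speed, and a smaller `smoothing` trades responsiveness for steadiness.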

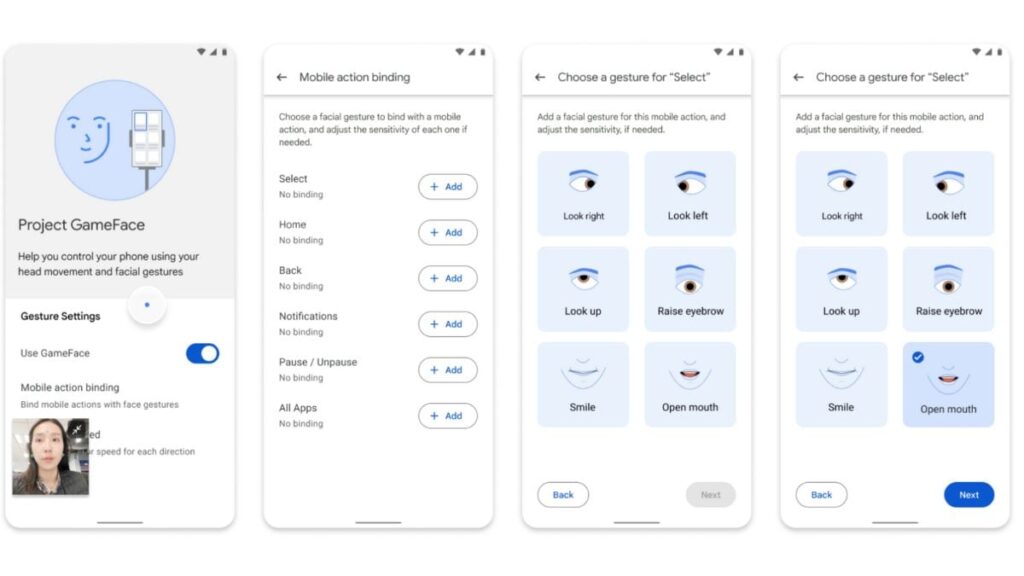

The API recognizes 52 facial gestures, including raising the eyebrows, opening the mouth, and moving the lips. These gestures can be mapped to a wide range of functions on an Android device. One notable feature is drag and drop, which users can use to swipe across the home screen. To perform a drag, users define a start point and an end point with gestures: for example, opening the mouth to start the drag, moving the head to move the cursor, and closing the mouth again once the end point is reached.
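The drag gesture described above can be modeled as a small state machine. The sketch below assumes the face tracker supplies a per-frame score in [0, 1] for the chosen expression, such as a mouth-opening score (assumed here to come from a blendshape like `jawOpen`). `DragGesture` and its two thresholds are hypothetical and not taken from the Gameface code.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Point = Tuple[int, int]


@dataclass
class DragGesture:
    """Hypothetical drag detector driven by a single facial-gesture score.

    Assumes a per-frame score in [0, 1], e.g. how far the mouth is open.
    """
    press_threshold: float = 0.5    # score at or above which a drag starts
    release_threshold: float = 0.3  # score at or below which the drag ends
    dragging: bool = False
    path: List[Point] = field(default_factory=list)

    def update(self, score: float, cursor: Point) -> Optional[Tuple[Point, Point]]:
        """Feed one frame; return (start, end) when a drag completes, else None."""
        if not self.dragging and score >= self.press_threshold:
            # Mouth opened: the current cursor position is the start point.
            self.dragging = True
            self.path = [cursor]
        elif self.dragging:
            # Head movement while the mouth stays open moves the cursor.
            self.path.append(cursor)
            if score <= self.release_threshold:
                # Mouth closed: the current cursor position is the end point.
                self.dragging = False
                return self.path[0], self.path[-1]
        return None


drag = DragGesture()
frames = [(0.1, (0, 0)), (0.8, (10, 10)), (0.4, (50, 10)), (0.1, (90, 10))]
for score, pos in frames:
    result = drag.update(score, pos)
    if result:
        print("drag from", result[0], "to", result[1])
```

Using two thresholds (hysteresis) keeps a score that hovers around a single cutoff from ending the drag early. On Android, a finished start/end pair could then be replayed as a swipe through an accessibility service, for example with `AccessibilityService.dispatchGesture()`, though the article does not say how Gameface does this.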

Notably, although the code is available on GitHub, it is now up to developers to build apps with it so that the technology actually reaches users. Apple also recently introduced a feature that lets users control the iPhone with eye tracking.